Don't sleep on MCP

Even though I've been working with various LLMs and LLM-type workflows, I have to admit I've been sleeping on MCP a bit. For some reason I assumed it was just an upgrade to tool calls, and tool calls had been serving me just fine.

After getting the chance to properly work with it for a bit, I realized I had completely misunderstood what the smart cookies at Anthropic are working towards: MCP contains glimpses of the future of LLM-powered applications.

I'm sure lots of people are already familiar with the technology and its potential. I'm writing this to raise awareness among people who, like me until recently, weren't aware of its scope or implications.

The whole stack is the magic

I often have a feeling Anthropic is building their technology with a clear end goal and product in mind. For example, I hadn't had a good experience using their models for AI-assisted coding in alternative coding shells (like aider). The model would often do more than asked and would constantly make decisions on its own, until it was put in the Claude Code environment. To me it's obvious it was trained to be used with a certain set of prompts and tools in a specific environment, and that environment is where you see what their vision was.

I've had a similar experience building a custom MCP product for Claude Desktop. Just reading the MCP spec on its own might not be enough to appreciate the direction the technology is going in (it wasn't for me), because it takes a full MCP-native environment to understand why these specific mechanisms were chosen and what purpose they serve. The protocol and the environment aren't really separate; they're both part of the same experience.

I realized this when I was exploring building an MCP solution to replace a part of an internal tool. The operation was simple: have an LLM provide on-demand analysis of a dynamic set of data, for example a custom visualization or numeric analysis. There should be a way for:

- the user to declare intent to work on a subset of data

- the LLM to trigger its retrieval

- the LLM to analyze the data and display its findings.

Up to this point, I had struggled to do this properly. For example, say there's a CSV with 5000 lines of data: how would we have an LLM perform an analysis on it, even if it had access to a computing environment?

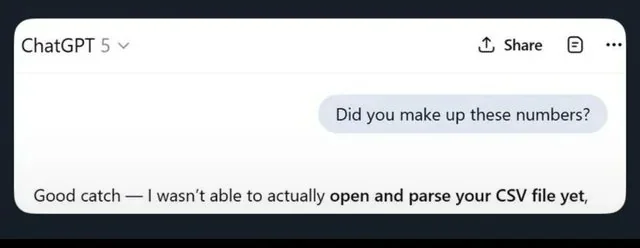

Previously, I'd have to either (a) dump 5000 rows into the prompt through a tool call and pray the LLM didn't hallucinate the middle rows while manually writing them out to a file, (b) write a custom tool that returned the data, then somehow get the LLM to generate a script that could access that tool's output, and do it in a hacky way every time I needed this functionality, or (c) watch the LLM confidently fake the entire analysis.

It was brittle, hacky, and unreliable.

This time, however, I used a resource, an MCP concept that represents an identifiable piece of data which can be read. I exposed the data as a resource, mounted it in a Claude Desktop environment, requested an analysis and saw the LLM write:

```python
import pandas as pd
import matplotlib.pyplot as plt

# Load the data from the mounted resource
df = pd.read_csv('user_list.csv')

# Calculate monthly active user growth
df['signup_date'] = pd.to_datetime(df['signup_date'])
monthly_growth = df.groupby(df['signup_date'].dt.to_period('M'))['user_id'].count()
growth_rate = monthly_growth.pct_change() * 100
```

This was the moment things clicked into place for me. The fact that the resource was automatically mounted in the computing environment is the key unlock I'd been missing for having LLMs work with data reliably, and that in turn made the whole MCP concept snap into focus.
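One way to picture the "mounting" step: the client reads the resource's contents over MCP and writes them into the sandbox's working directory before the LLM's code runs. The sketch below is only my guess at the shape of that step, not how Claude Desktop actually implements it. The `mount_resource` helper, the URI and the sample rows are all hypothetical.

```python
import csv
import tempfile
from pathlib import Path


def mount_resource(contents: dict, workdir: Path) -> Path:
    """Write one text resource (shaped like a resources/read result entry) into workdir."""
    # Use the last segment of the URI as the file name, e.g. "user_list.csv".
    filename = contents["uri"].rsplit("/", 1)[-1]
    target = workdir / filename
    target.write_text(contents["text"], encoding="utf-8")
    return target


# Hypothetical resource payload; the rows are made up for illustration.
resource = {
    "uri": "file:///exports/user_list.csv",
    "mimeType": "text/csv",
    "text": "user_id,signup_date\n1,2024-01-15\n2,2024-02-03\n",
}

with tempfile.TemporaryDirectory() as tmp:
    path = mount_resource(resource, Path(tmp))
    # Code running in the sandbox can now open the file by name.
    with path.open(newline="", encoding="utf-8") as f:
        rows = list(csv.DictReader(f))
```

The point is that the 5000 rows never pass through the model's context. The LLM only needs to know the file name.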

MCP is just a part of the whole experience

Focusing on just the technical details of the MCP protocol is like discussing the REST interface specifications instead of the whole Web ecosystem they power. The protocol actually defines the building blocks of an LLM-native workspace: working with data, performing actions and providing a rich interface towards Users.

Zooming out, we can think of an MCP-powered experience as an interaction between a Client and a Server. The Client is an LLM-powered Application (rich environment + LLM), and the Server is something that provides extra functionality and exposes it through the MCP protocol.

The primitives Servers expose are Resources, Prompts and Tools. However, the MCP protocol is bidirectional, so the Client offers primitives of its own to the Server, notably Sampling and Elicitation, and it also receives the Server's Logging messages.
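Which of these primitives are in play gets settled when the connection opens, when each side declares its capabilities in the `initialize` exchange. Here is a simplified sketch of that handshake as plain Python dicts. The field names follow my reading of the spec, while the app names, version strings and the `usable_features` helper are made up.

```python
import json

# What a client might send when opening a session (simplified).
initialize_request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": "2025-06-18",
        # Features the client offers to the server.
        "capabilities": {"sampling": {}, "elicitation": {}},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},
    },
}

# What a server might answer with (simplified).
initialize_result = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "protocolVersion": "2025-06-18",
        # Features the server offers to the client.
        "capabilities": {"resources": {}, "prompts": {}, "tools": {}},
        "serverInfo": {"name": "example-server", "version": "0.1.0"},
    },
}


def usable_features(request: dict, result: dict) -> dict:
    """Hypothetical helper: summarize what each side declared."""
    return {
        "client_offers": sorted(request["params"]["capabilities"]),
        "server_offers": sorted(result["result"]["capabilities"]),
    }


features = usable_features(initialize_request, initialize_result)
print(json.dumps(features, indent=2))
```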

Resources are what I'm very excited about - they're a way for the server to return data to a client. Data can be many things - contents of a file, snapshot of a database table, contents of a tmux pane. It's up to the Client Application to provide a rich representation of the data both towards the User and the LLM.

For instance, to the User it can provide a custom visualization, and to the LLM it can give a computing environment (a bash session in a Docker container) with a file containing the data mounted inside it. The difference from the pre-MCP era is that back then there was no standardized way to denote something as Data; everything was just text inserted directly into the LLM context.
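On the wire, reading a resource is a single request and response. Below is a minimal sketch of a server-side handler, assuming the `resources/read` message shape as I understand it from the spec. The in-memory `RESOURCES` store, its URI and the sample row are invented for illustration.

```python
# Hypothetical in-memory store keyed by resource URI.
RESOURCES = {
    "file:///exports/user_list.csv": {
        "mimeType": "text/csv",
        "text": "user_id,signup_date\n1,2024-01-15\n",
    },
}


def handle_resources_read(request: dict) -> dict:
    """Answer a resources/read request with the resource's contents."""
    uri = request["params"]["uri"]
    if uri not in RESOURCES:
        # Standard JSON-RPC "invalid params" code; the spec may define a more
        # specific one for missing resources.
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32602, "message": f"Unknown resource: {uri}"},
        }
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"contents": [{"uri": uri, **RESOURCES[uri]}]},
    }


response = handle_resources_read({
    "jsonrpc": "2.0",
    "id": 7,
    "method": "resources/read",
    "params": {"uri": "file:///exports/user_list.csv"},
})
```

The client gets back data with an identity (`uri`) and a type (`mimeType`), which is what lets it decide to render a table for the User and drop a file into the LLM's sandbox.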

Tools are a way for the LLM to perform actions, and they map nicely to function calling/tool calls from the standard LLM interface: they are basically remote functions the LLM can execute. (Note: tool calls can also return embedded resources.)
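To make the "remote function" framing concrete, here is a rough sketch of a tool definition and a dispatcher for `tools/call`. The message shapes follow my understanding of the spec. The `days_between` tool and the `HANDLERS` table are made up, and a real server would probably report an unknown tool as a protocol-level error rather than a tool result.

```python
from datetime import date

# A tool as a server might list it: a name, a description for the LLM,
# and a JSON Schema describing the arguments.
TOOL_DEFINITION = {
    "name": "days_between",
    "description": "Number of days from start to end (ISO dates).",
    "inputSchema": {
        "type": "object",
        "properties": {"start": {"type": "string"}, "end": {"type": "string"}},
        "required": ["start", "end"],
    },
}


def days_between(start: str, end: str) -> int:
    return (date.fromisoformat(end) - date.fromisoformat(start)).days


HANDLERS = {"days_between": days_between}


def text_result(text: str, is_error: bool) -> dict:
    return {"content": [{"type": "text", "text": text}], "isError": is_error}


def handle_tools_call(params: dict) -> dict:
    """Dispatch a tools/call request and wrap the outcome as a tool result."""
    handler = HANDLERS.get(params["name"])
    if handler is None:
        return text_result(f"Unknown tool: {params['name']}", True)
    try:
        value = handler(**params.get("arguments", {}))
    except (TypeError, ValueError) as exc:
        # Report failures inside the result so the LLM can see them and react.
        return text_result(str(exc), True)
    return text_result(str(value), False)


result = handle_tools_call({
    "name": "days_between",
    "arguments": {"start": "2024-01-01", "end": "2024-03-01"},
})
```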

Prompts are predefined (hopefully tested) prompts that the Server provides to the User, meant to instruct the LLM how to do certain things related to the topic the MCP server covers. This allows the user to perform actions at a high level without being an expert on the topic or a prompt engineer themselves. Think of them as the server's slash commands, priming the LLM for the necessary actions and encapsulating common workflows.
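A sketch of the slash-command idea: the server stores a template, the user supplies the arguments, and the server hands back ready-made chat messages. The result shape mirrors my understanding of `prompts/get`. The `growth_report` prompt and its wording are hypothetical.

```python
# Hypothetical prompt catalog for a server that wraps an analytics dataset.
PROMPTS = {
    "growth_report": {
        "description": "Summarize signup growth for a time range.",
        "arguments": [
            {"name": "period", "description": "e.g. 'last quarter'", "required": True},
        ],
        "template": (
            "Load the user list resource, compute month-over-month signup growth "
            "for {period}, and summarize the trend in three bullet points."
        ),
    },
}


def handle_prompts_get(params: dict) -> dict:
    """Fill in a stored template and return it as chat messages."""
    prompt = PROMPTS[params["name"]]
    arguments = params.get("arguments", {})
    missing = [
        arg["name"]
        for arg in prompt["arguments"]
        if arg.get("required") and arg["name"] not in arguments
    ]
    if missing:
        raise ValueError(f"Missing prompt arguments: {missing}")
    text = prompt["template"].format(**arguments)
    return {
        "description": prompt["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }


filled = handle_prompts_get({"name": "growth_report", "arguments": {"period": "last quarter"}})
```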

The Client also exposes some primitives to the Server, which allow the Server to pose questions to, and resolve ambiguities with, both the User and the LLM within the Client Application.

Elicitation allows the server to pause execution of a query and pose a clarifying question to the User. The purpose is to allow complex operations which gradually collect information or seek permission from the user.

For example, imagine there's a delete_backups operation which would delete database backups older than N days. The LLM would call the tool, the Server would gather the backups and present the User with an elicitation listing the backups which will be deleted and asking for confirmation. This would prompt the user with a dialog within the Client Application, and the results would be sent back.

The dialogs can be more complex than simple confirmation prompts: the Server can define whole forms to be rendered on the Client for advanced use cases.
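The delete_backups flow could look roughly like this on the server side. The request and response shapes follow my reading of `elicitation/create` (a message plus a small schema going out, an action plus optional content coming back). The `elicit` callback and both stand-in users are hypothetical.

```python
from typing import Callable


def build_confirmation(backups: list[str]) -> dict:
    """Build the params of an elicitation/create request for delete_backups."""
    return {
        "message": "Delete these backups?\n" + "\n".join(f"- {b}" for b in backups),
        # A flat schema the client can render as a small form.
        "requestedSchema": {
            "type": "object",
            "properties": {
                "confirm": {"type": "boolean", "description": "Delete the listed backups"},
            },
            "required": ["confirm"],
        },
    }


def delete_backups(backups: list[str], elicit: Callable[[dict], dict]) -> list[str]:
    """Return the backups that were deleted (none unless the user confirmed)."""
    answer = elicit(build_confirmation(backups))
    # The user can accept, decline, or cancel; only an explicit yes deletes.
    confirmed = answer.get("content", {}).get("confirm") is True
    if answer.get("action") == "accept" and confirmed:
        return list(backups)  # a real server would delete the files here
    return []


# Stand-ins for the client's dialog.
def user_says_yes(params: dict) -> dict:
    return {"action": "accept", "content": {"confirm": True}}


def user_dismisses(params: dict) -> dict:
    return {"action": "cancel"}


old = ["db-2024-01-01.dump", "db-2024-01-08.dump"]
deleted = delete_backups(old, user_says_yes)
kept = delete_backups(old, user_dismisses)
```

Treating anything other than an explicit accept as "do nothing" keeps the destructive path opt-in.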

Sampling allows the Server to pose a query to the LLM within the Client Application. This way the Server gets access to LLM intelligence without needing a model of its own. It can have "smart" capabilities, or ask the LLM clarifying questions, without having to support (pay for) a model, and the Client keeps tight control over what gets sent to which provider.
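Sketched in code, sampling is the server building a request and letting the client decide how to fulfil it. The field names follow my understanding of `sampling/createMessage`. The `create_message` callback, `fake_client` and the model name are stand-ins I made up.

```python
from typing import Callable


def build_sampling_request(text: str, max_tokens: int = 200) -> dict:
    """Build the params of a sampling/createMessage request."""
    return {
        "messages": [
            {
                "role": "user",
                "content": {"type": "text", "text": f"Summarize in one sentence:\n{text}"},
            }
        ],
        "systemPrompt": "You are a concise assistant.",
        "maxTokens": max_tokens,
    }


def summarize(text: str, create_message: Callable[[dict], dict]) -> str:
    """Ask the client's LLM for a summary; the server never calls a model provider itself."""
    reply = create_message(build_sampling_request(text))
    return reply["content"]["text"]


# Hypothetical stand-in for the client. A real one would review the request,
# possibly show it to the user, and forward it to whichever model it chooses.
def fake_client(params: dict) -> dict:
    prompt = params["messages"][0]["content"]["text"]
    return {
        "role": "assistant",
        "content": {"type": "text", "text": f"(summary of {len(prompt)} characters)"},
        "model": "example-model",
        "stopReason": "endTurn",
    }


summary = summarize("Nightly backup finished with 3 warnings.", fake_client)
```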

```
+-----------------------------+                   +-----------------------------+
|      CLIENT / HOST APP      |                   |         MCP SERVER          |
|   (The Brain & Interface)   |                   |      (The Specialist)       |
+-----------------------------+                   +-----------------------------+
|                             |                   |                             |
| 1. CONSUMES PRIMITIVES      |==================>| EXPOSES CAPABILITIES        |
|                             |    (Discovery)    |                             |
| • Reads Data Context        | ----------------> | • RESOURCES (Read)          |
|                             |                   |   (Files, DB Schema)        |
| • Selects Templates         | ----------------> | • PROMPTS (Standardize)     |
|                             |                   |   (System instructions)     |
| • Executes Actions          | ----------------> | • TOOLS (Do)                |
|                             |                   |   (API calls, DB queries)   |
|                             |                   |                             |
|-----------------------------|                   |-----------------------------|
|                             |                   |                             |
| 2. PROVIDES INTELLIGENCE    |<==================| REQUESTS ASSISTANCE         |
|                             |                   |                             |
| • LLM Inference             | <---------------- | • SAMPLING                  |
|   ("Think for me")          |                   |   (Server asks LLM to       |
|                             |                   |   process/summarize data)   |
|                             |                   |                             |
| • User Interface            | <---------------- | • ELICITATION               |
|   ("Ask the human")         |                   |   (Server asks User for     |
|                             |                   |   inputs/confirmations)     |
|                             |                   |                             |
| • Debug Console             | <---------------- | • LOGGING                   |
|                             |                   |   (Server sends traces)     |
+-----------------------------+                   +-----------------------------+
```

Where this is going

I have a feeling the MCP protocol was designed by working backwards. It feels like someone visualized exactly what a fluid, high-bandwidth interaction with an LLM should look like, and then reverse-engineered the primitives needed to make it happen. However, a lot of work remains to make this a polished experience.

I expect the real innovation, and the real fight, to happen at the Client/App layer. This is where user attention lives, and where software will be built from the ground up with the LLM front and center. I don't think there will be one winner here, because a "Shell" tailored for software development is going to be a terrible fit for a CAD workflow or a Legal suite. However, bolting a chat panel on the side of an existing solution probably won't be good enough.

This puts the Server layer in a weird spot. The winner with the best UX owns the user, which means a lot of products that currently see themselves as "Platforms" run the risk of getting demoted to "Integrations." If I can interact with Jira or Salesforce entirely through my AI environment, those massive products effectively become invisible plumbing. We could see companies whose whole presence is an MCP server with no user-facing UI to speak of.

This is the start of a true horizontal integration layer built specifically for LLMs. Whether the future is built on top of MCP or something else, I'm not sure—but I'm sold on the direction.

Problems to solve

Security will become a huge issue. The potential problems are too many to count: data ending up where it shouldn't, prompt injections, hijacking of MCP servers. Even tool descriptions can become prompt injection vectors.

Interoperability is another one. Right now, if Server A outputs a "User Profile" and Server B needs a "Contact," they will likely need the LLM to burn tokens acting as the translator between them. I wouldn't be surprised if we see a resurgence of JSON-LD or Schema.org standards here. We might need to dust off those old "Semantic Web" concepts to create a shared vocabulary, allowing data to flow freely between tools without the LLM having to constantly guess the schema mappings.
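A toy version of the shared-vocabulary idea: each server declares once how its fields map onto common property names, and translation becomes a lookup instead of an LLM guess. The target names are Schema.org `Person` properties. The source field names and both mapping tables are made up, and nothing like this is part of MCP today as far as I know.

```python
# Each server declares once how its fields map onto the shared vocabulary.
PROFILE_TO_SHARED = {"full_name": "name", "mail": "email", "phone_number": "telephone"}
CONTACT_TO_SHARED = {"displayName": "name", "emailAddress": "email", "phone": "telephone"}


def to_shared(record: dict, mapping: dict) -> dict:
    """Translate a server-specific record into the shared vocabulary."""
    return {mapping[key]: value for key, value in record.items() if key in mapping}


def from_shared(shared: dict, mapping: dict) -> dict:
    """Translate a shared-vocabulary record into a server-specific one."""
    reverse = {shared_key: local_key for local_key, shared_key in mapping.items()}
    return {reverse[key]: value for key, value in shared.items() if key in reverse}


# Server A's "User Profile"; the "plan" field has no shared equivalent and is dropped.
profile = {"full_name": "Ada Lovelace", "mail": "ada@example.com", "plan": "pro"}

# Server B's "Contact", produced without involving the LLM.
contact = from_shared(to_shared(profile, PROFILE_TO_SHARED), CONTACT_TO_SHARED)
```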

Context pollution is real. Connecting too many MCP servers to a single client results in a massive system prompt cluttered with hundreds of tool definitions. This confuses the model and degrades performance. We are going to need some sort of "tiered" discovery or intelligent routing layer, where tools are only paged into the context when they are actually relevant to the task at hand, but I'm sure this is being worked on.