Model your interactions, not your messages

Every now and then I come across some concept, technique, or approach in software development that just makes me go "huh...", and usually it's an obvious or simple thing that for some reason I hadn't considered doing or using properly. The previous time this happened was when I realized that manually setting flex-basis lets me control element sizing regardless of content - solving overflow issues I'd been fighting for months.

This time it's about storing the messages that power LLM interactions. I've been developing LLM-powered apps on the Phoenix/Elixir stack for a while now, and like most Elixir developers, my first inclination was to keep the chain of messages in some process state (whether that's LiveView assigns or a separate GenServer doesn't really matter). This chain would usually be persisted as a list of messages, each a role/content pair, which would be updated as the conversation goes on and loaded back on reload.
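
For illustration, the in-memory approach described above looks roughly like this. It's a minimal sketch, not code from any of my projects: the `NaiveChat` module name and its functions are made up for this example, and persistence and the actual LLM call are left out.

```elixir
defmodule NaiveChat do
  # Hypothetical module: keeps the whole message chain in process state.
  use GenServer

  # Client API

  def start_link(initial_messages \\ []) do
    GenServer.start_link(__MODULE__, initial_messages)
  end

  def add_message(pid, role, content) do
    GenServer.call(pid, {:add_message, role, content})
  end

  def messages(pid), do: GenServer.call(pid, :messages)

  # Server callbacks

  @impl true
  def init(initial_messages), do: {:ok, initial_messages}

  @impl true
  def handle_call({:add_message, role, content}, _from, messages) do
    # A real app would also persist the message here (omitted in this sketch).
    updated = messages ++ [%{role: role, content: content}]
    {:reply, updated, updated}
  end

  def handle_call(:messages, _from, messages) do
    {:reply, messages, messages}
  end
end
```

Every change to the chain has to go through this one process, and the persisted copy has to be kept in sync with it by hand.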

This usually works fine until you want to build multi-agent systems or anything else that requires more fine-grained control over the chain, for example:

- Compacting the chain

- Controlling the system message based on context

- Dynamically controlling the context provided

- Compacting old tool call results

- Triggering workflows and presenting the final message as result

- Complex document management (something I try to do is pass the document directly until my own document processing pipeline finishes, then use the processed version)

- Using one LLM to dynamically provide feedback to the driver LLM

The huh moment

I've been investing time in learning to build things with the Ash framework, and one of the things I've looked at is the new ash_ai extension. My focus here isn't on the extension itself but on the aha moment I had when looking at its reference implementation, in particular the Respond change, which is in charge of taking a chain of messages and triggering a response.

It might not be groundbreaking, but what caught me off guard is that they reload the chain from the database and rebuild it on every user message. As soon as I saw this, things clicked, and I saw a way to unlock several issues I'd been facing.

For example, in a project I'm building, Reynote, there's a set of observer AI systems that monitor a conversation and provide live feedback to both the user and the LLM. My initial Elixir-influenced instincts led me to implement something like this, which, in hindsight, way over-complicates it.

    defmodule KaplRuntime.TherapySessions.TherapySession do
      use KaplRuntime.Infra.Supervisor,
        registry: KaplRuntime.TherapySessions.Registry,
        prefix: "therapy_session"

      @observer_modules [
        KaplRuntime.TherapySessions.Agents.SolutionFocusedObserver,
        KaplRuntime.TherapySessions.Agents.CognitiveBehavioralObserver,
        KaplRuntime.TherapySessions.Agents.CommunicationPatternObserver,
        KaplRuntime.TherapySessions.Agents.NarrativeObserver,
        KaplRuntime.TherapySessions.Agents.MotivationalInterviewingObserver,
        KaplRuntime.TherapySessions.Agents.EmotionalProcessObserver
      ]

      @partner_perspective [
        KaplRuntime.TherapySessions.Agents.PartnerPerspectiveObserver
      ]

      def children do
        [KaplRuntime.TherapySessions.Agents.Therapist] ++ @observer_modules ++ @partner_perspective
      end

      def get_observers(_user_id) do
        @observer_modules
      end
    end

This basically sets up several GenServers communicating over a shared PubSub channel. As you can imagine, things got out of hand quickly, especially when the message chain needed to be altered: some agents needed only a subset of messages, and some had mechanisms others didn't need (time-keeping, context and memory management...).

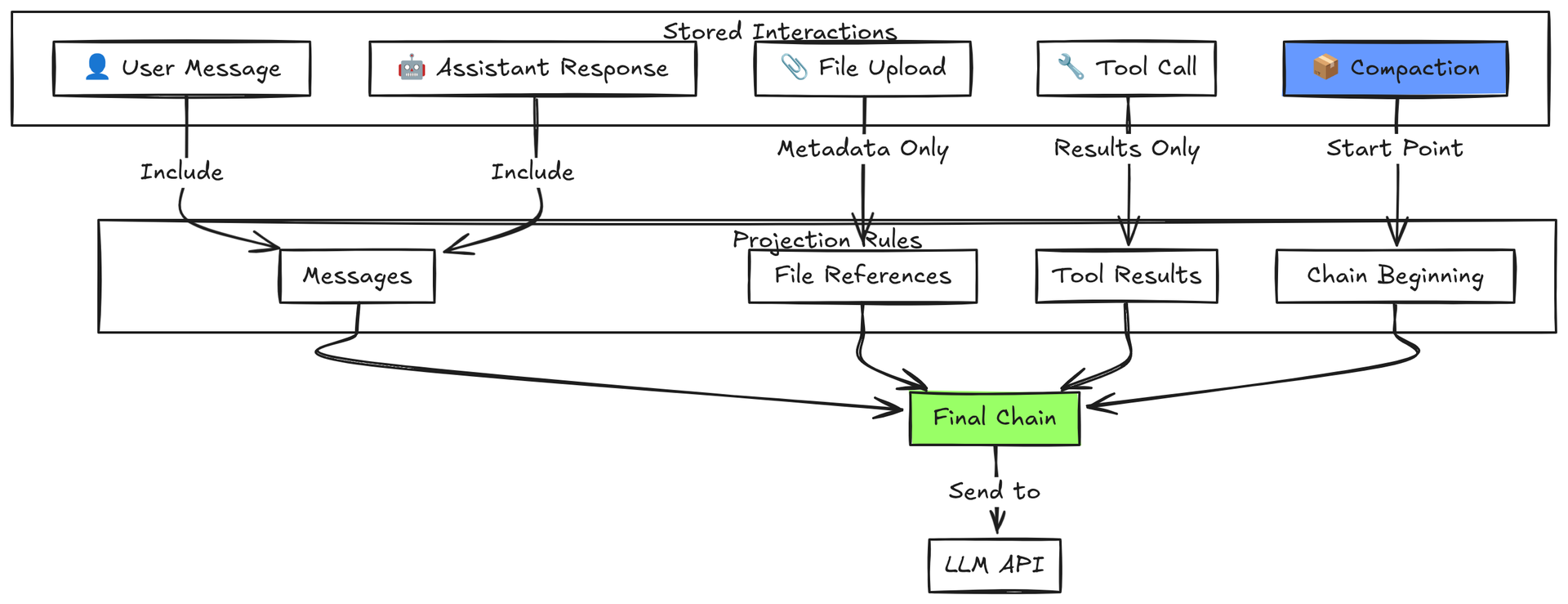

Once my mental model changed from storing specific messages to treating this more like an event-sourcing problem, where I model the whole set of interactions and build a projection on top of it, I saw a way to solve a lot of the aforementioned issues.

The Pattern in a Nutshell

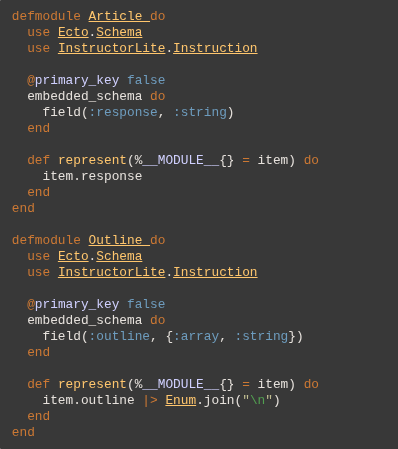

Instead of maintaining a mutable message chain in memory, we:

- Store rich interactions - not just role/content pairs, but typed interactions with metadata

- Rebuild projections on demand - each request loads from DB and builds the appropriate view

- Let the database be the source of truth - no more syncing between memory and persistence

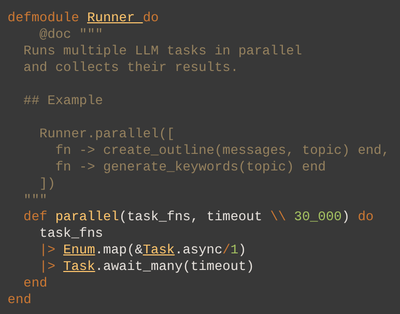

Here's a simplified example of what this looks like:

    # Instead of this:
    messages = [
      %{role: "user", content: "analyze these contracts"},
      %{role: "assistant", content: "I'll help you with that..."}
      # ... hundreds of messages later ...
    ]

    # We store this:
    interactions = [
      %{type: :user_message, content: "analyze these contracts"},
      %{type: :file_upload,
        metadata: %{filename: "contract.pdf", status: :pending}},
      %{type: :file_upload,
        metadata: %{filename: "invoice.txt", status: :processed},
        content: "Invoice #1234\nAmount: $5,000\nDue: Net 30..."},
      %{type: :assistant_response, content: "I see you've uploaded two files..."},
      # ... many interactions later ...
      %{type: :compaction,
        status: :completed,
        content: "User uploaded contract.pdf and invoice.txt for analysis.\n" <>
          "Discussed payment terms (Net 30), liability clauses..."},
      %{type: :user_message, content: "What about the termination clause?"}
      # ... only recent interactions after compaction ...
    ]

    # And rebuild chains dynamically:
    def build_chain(session_id) do
      interactions = load_from_db(session_id)

      interactions
      |> start_from_last_compaction() # Skip everything before completed compaction
      |> transform_files_to_metadata() # Include parsed content or just metadata
      |> format_as_messages()
    end

What This Unlocks

This simple shift in thinking - from managing mutable state to rebuilding projections - suddenly makes previously complex features almost trivial:

- Compaction? Just another interaction type that future rebuilds can use as a starting point

- Multiple agents? Each builds their own view from the same interaction history

- Document management? Store metadata as interactions, fetch content on demand (tool calls)

- Branching conversations? Just filter interactions differently

- Audit trails? You already have the complete history
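
The helpers that `build_chain` calls in the simplified example aren't defined there, so here is one way they might look. Treat it as a sketch under assumptions: the `ChainProjection` module name is made up, interactions are plain maps passed in already loaded and in chronological order (no database), and the choice to send a compaction summary as a `"system"` message and file uploads as `"user"` messages is mine, not something the pattern prescribes.

```elixir
defmodule ChainProjection do
  # Hypothetical sketch: interactions are plain maps, already loaded and in
  # chronological order. A real app would load them from the database.

  def build_chain(interactions) do
    interactions
    |> start_from_last_compaction()
    |> transform_files_to_metadata()
    |> format_as_messages()
  end

  # Keep the most recent completed compaction and everything after it.
  # If there is no completed compaction, keep the full history.
  def start_from_last_compaction(interactions) do
    last_index =
      interactions
      |> Enum.with_index()
      |> Enum.filter(fn {i, _idx} -> i.type == :compaction and i[:status] == :completed end)
      |> Enum.map(fn {_i, idx} -> idx end)
      |> List.last()

    case last_index do
      nil -> interactions
      idx -> Enum.drop(interactions, idx)
    end
  end

  # Processed uploads keep their parsed content; any other upload is reduced
  # to a short note built from its metadata.
  def transform_files_to_metadata(interactions) do
    Enum.map(interactions, fn
      %{type: :file_upload, metadata: %{status: :processed, filename: name}, content: content} = i ->
        %{i | content: "[File #{name}]\n" <> content}

      %{type: :file_upload, metadata: %{filename: name}} = i ->
        Map.put(i, :content, "[File #{name} uploaded, still processing]")

      other ->
        other
    end)
  end

  # Map each interaction type to a role/content message (role choices are
  # an assumption of this sketch).
  def format_as_messages(interactions) do
    Enum.map(interactions, fn
      %{type: :assistant_response, content: c} -> %{role: "assistant", content: c}
      %{type: :compaction, content: c} -> %{role: "system", content: "Summary so far: " <> c}
      %{content: c} -> %{role: "user", content: c}
    end)
  end
end
```

Because the compaction is just another interaction in the list, nothing has to be deleted or rewritten: older interactions stay in the database for auditing, and the projection simply starts later.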

The beauty is that this pattern works regardless of your stack. Whether you're using Ash, Ecto, or even a different language entirely - the core insight remains: stop thinking about message chains as something to carefully maintain in memory. Think of them as projections you build from a richer interaction history.
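
To make "projections you build from a richer interaction history" a bit more concrete for the multi-agent case, here's a rough sketch of two agents reading the same history differently. Everything in it is illustrative: the `AgentViews` module, the `:driver` and `:observer` names, the `:observer_feedback` interaction type, and the four-turn window are assumptions for this example, not part of Reynote or ash_ai.

```elixir
defmodule AgentViews do
  # Hypothetical sketch: every agent projects the same interaction history
  # differently. The agent names and filters are illustrative only.

  # The driver sees the conversation plus any feedback addressed to it.
  def view(:driver, interactions) do
    interactions
    |> Enum.filter(&(&1.type in [:user_message, :assistant_response, :observer_feedback]))
    |> Enum.map(&to_message/1)
  end

  # An observer only needs the last few conversational turns.
  def view(:observer, interactions) do
    interactions
    |> Enum.filter(&(&1.type in [:user_message, :assistant_response]))
    |> Enum.take(-4)
    |> Enum.map(&to_message/1)
  end

  defp to_message(%{type: :assistant_response, content: c}),
    do: %{role: "assistant", content: c}

  defp to_message(%{type: :observer_feedback, content: c}),
    do: %{role: "system", content: "Observer note: " <> c}

  defp to_message(%{type: :user_message, content: c}),
    do: %{role: "user", content: c}
end
```

Neither agent owns or mutates the chain. Each one calls `view/2` with the shared history whenever it needs to respond, so adding a new kind of agent means adding a new projection rather than another process to keep in sync.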

In upcoming posts, I'll dive deep into specific implementations - smart compaction strategies, document management at scale, and multi-agent orchestration. But even if you just take away this one idea - rebuild your chains, don't maintain them - you'll find a whole class of problems becomes much simpler. Sometimes the best solutions aren't about adding complexity - they're about finding the right mental model.